Swift Concurrency and Legacy Primitives: A Balancing Act

Swift Concurrency, introduced at WWDC21, offers a safer and more efficient approach to asynchronous programming than Grand Central Dispatch (GCD). However, integrating Swift Concurrency with older primitives such as NSLock and GCD queues requires careful consideration to avoid potential pitfalls.

1. Swift Concurrency vs. GCD

Swift Concurrency distinguishes itself from GCD in its threading model and its approach to handling blocked threads.

Threading Model:

- GCD: GCD employs a thread-pool model where threads are dynamically created and managed by the system. This can lead to thread explosion, particularly with concurrent queues, where the system might overcommit by creating more threads than CPU cores. GCD dynamically adjusts the number of threads based on system load and available resources.

Example:

```swift
import Foundation

let queue = DispatchQueue.global(qos: .background)

for i in 0..<100 {
    queue.async {
        // Perform some lengthy task, such as:
        print("Task \(i) is running on thread: \(Thread.current)")
        Thread.sleep(forTimeInterval: 2) // Simulate a long-running, blocking task
    }
}
```

This code could potentially create many more threads than the device has cores. Each task is dispatched to the global concurrent queue, and because each task blocks (here via Thread.sleep), GCD may bring up new threads so the queue keeps making progress, up to an internal limit. This can lead to performance issues due to excessive context switching and resource contention.

- Swift Concurrency: Swift Concurrency, by default, uses a cooperative thread pool limited to the number of CPU cores. It relies on cooperative multitasking: tasks yield control at await suspension points so that other tasks can run, which keeps CPU cores busy while preventing thread explosion.

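Example:

```swift
import Foundation

func lengthyTask() async {
    print("Lengthy task is running on thread: \(Thread.current)")
    // Task.sleep(nanoseconds:) throws if the task is cancelled; try? ignores that here.
    try? await Task.sleep(nanoseconds: 2_000_000_000) // Simulate a long-running task
}

func anotherLengthyTask() async {
    print("Another lengthy task is running on thread: \(Thread.current)")
    try? await Task.sleep(nanoseconds: 1_000_000_000)
}

// async let must be used from an async context, such as this async function.
func runBoth() async {
    async let task1: Void = lengthyTask()
    async let task2: Void = anotherLengthyTask()
    await task1
    await task2
}
```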

This code leverages the cooperative thread pool to use CPU cores efficiently. When execution reaches an await, the current task can be suspended and its thread freed to run other tasks until the awaited work completes. This avoids creating unnecessary threads and minimizes context-switching overhead.
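For a closer comparison with the 100-task GCD loop above, here is a minimal sketch of the same workload written with a task group. The function name `runTasks(count:)` is hypothetical and the simulated wait is shortened to 10 ms. Because `Task.sleep` suspends the task rather than blocking a thread, the waiting tasks do not each need a thread of their own.

```swift
// Runs `count` simulated long-running tasks on the cooperative pool.
// Waiting tasks are suspended, not blocked, so the pool does not need
// to grow beyond its core-sized limit.
func runTasks(count: Int) async -> Int {
    return await withTaskGroup(of: Int.self, returning: Int.self) { group in
        for i in 0..<count {
            group.addTask {
                // Non-blocking wait standing in for long-running work.
                try? await Task.sleep(nanoseconds: 10_000_000)
                return i
            }
        }
        // Count the child tasks as they finish.
        var finished = 0
        for await _ in group {
            finished += 1
        }
        return finished
    }
}

let finished = await runTasks(count: 100)
print("Finished \(finished) tasks")
```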

Handling Blocked Threads:

- GCD: When a thread in GCD is blocked, the system might spin up new threads to handle remaining tasks, potentially exacerbating thread explosion.

Example:

```swift
import Foundation

let queue = DispatchQueue.global()
let url = URL(string: "https://www.example.com/large_file.zip")!

queue.sync {
    // This blocking operation halts the current thread until the download finishes
    do {
        let data = try Data(contentsOf: url) // Download a large file (blocking)
        print("Downloaded data size: \(data.count) bytes")
    } catch {
        print("Failed to download data: \(error)")
    }
}
```

If this happens on a concurrent queue with many tasks, GCD might create additional threads to keep processing them. Downloading a large file can take a significant amount of time, during which the thread running this code is blocked waiting for the download to complete. If other tasks are enqueued on the same concurrent queue in the meantime, GCD might create new threads to handle them, potentially leading to thread explosion. For more on excessive context switching and thread explosion, take a look at this WWDC explanation at 11:03.

- Swift Concurrency: Instead of blocking a thread, Swift Concurrency suspends the task and represents it with a lightweight continuation. Continuations track where suspended tasks should resume, so a thread can switch between work items far more cheaply than a full thread context switch.

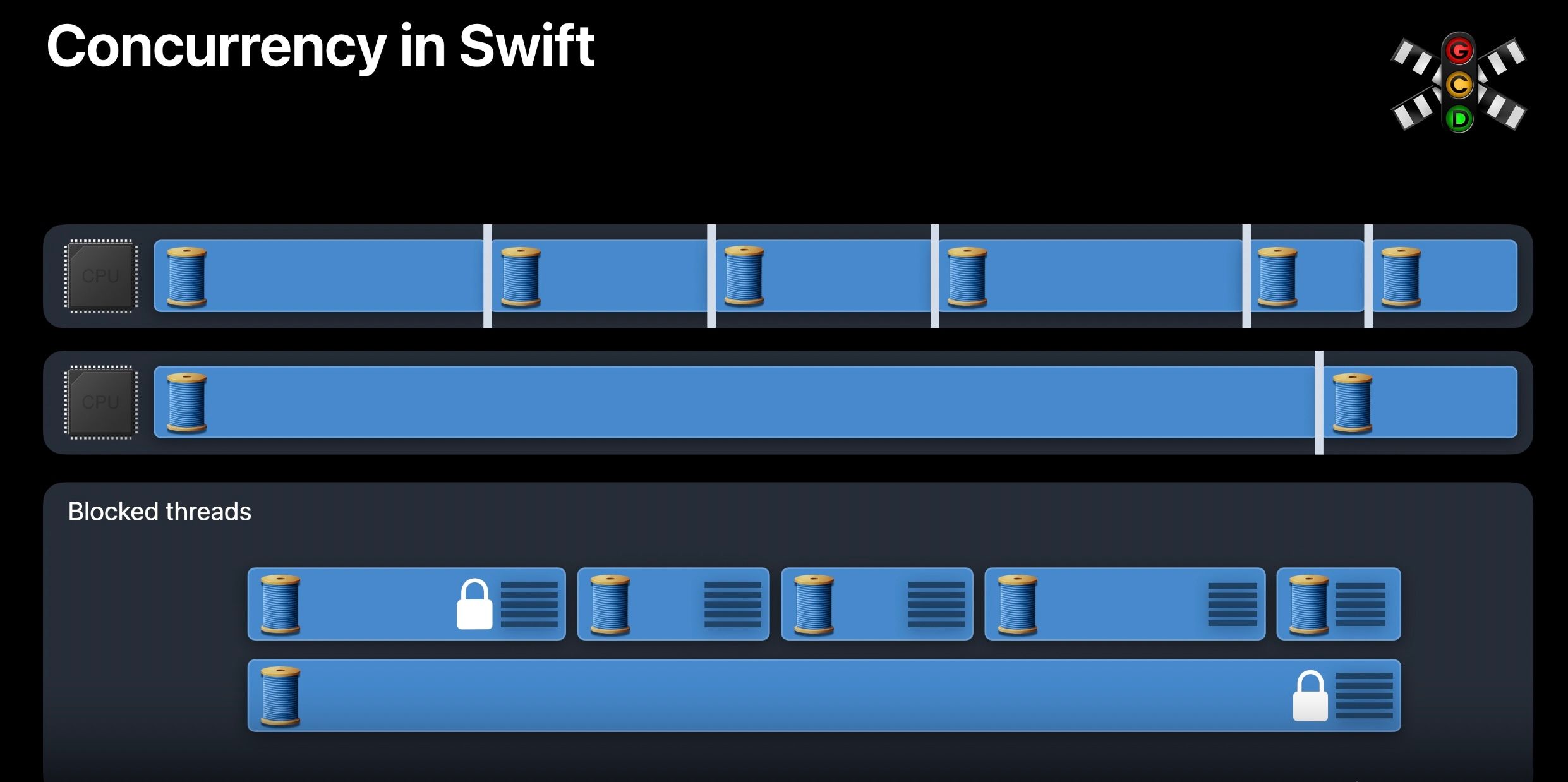

With GCD, when a thread is blocked, more threads are created and context switches occur

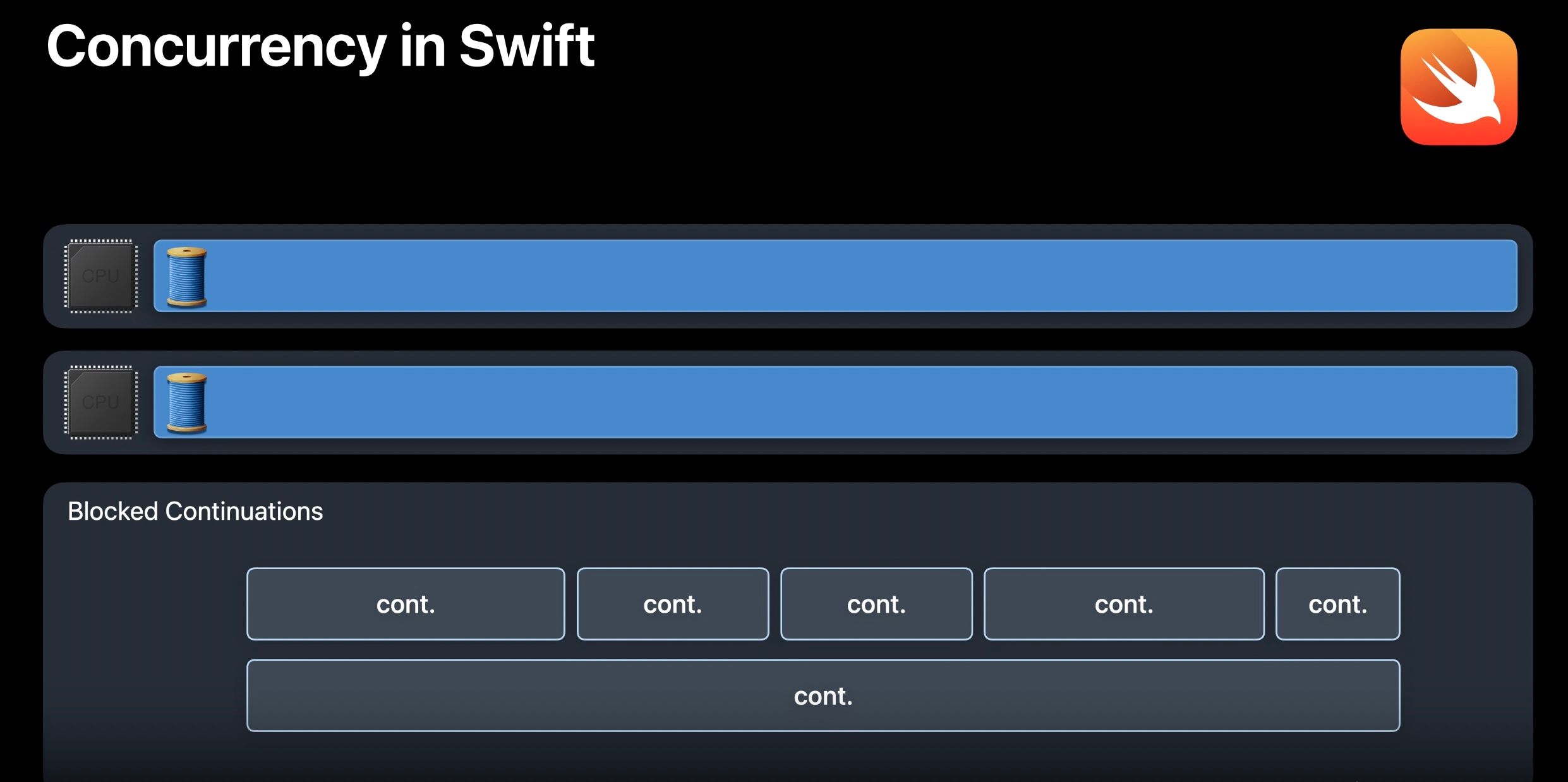

With Swift Concurrency, there will be only one thread for each CPU core

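Example:

```swift
import Foundation

func downloadLargeFile() async {
    let url = URL(string: "https://www.example.com/large_file.zip")!
    do {
        // Data(contentsOf:) is synchronous and cannot be awaited; URLSession's
        // async API (iOS 15 / macOS 12 and later) suspends the task instead.
        let (data, _) = try await URLSession.shared.data(from: url)
        print("Downloaded data size: \(data.count) bytes")
    } catch {
        print("Failed to download data: \(error)")
    }
}
```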

Using await lets the current thread be freed while waiting for the file data, so the thread is not blocked and can handle other tasks. The await keyword marks a potential suspension point: when execution reaches await, the current task can be suspended and the thread reused for other work. Once the awaited operation (downloading the file) completes, the task resumes and execution continues from the suspension point.

2. Safe Primitives vs. Legacy Primitives

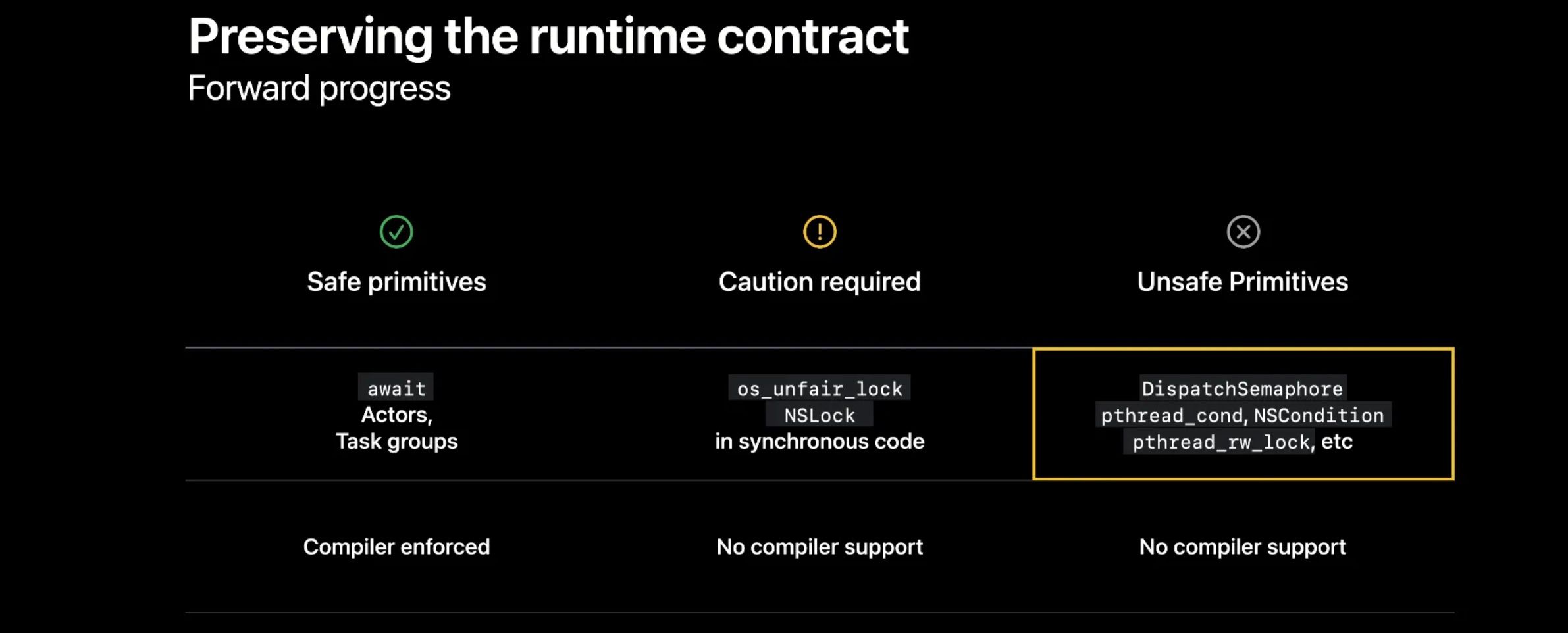

When adopting Swift Concurrency, it's crucial to keep performance in mind and to understand the implications of await for atomicity: an await is a potential suspension point, so other code can run and change shared state before execution continues. The recommended approach is to use safe primitives such as await, actors, and task groups, whose correct use is enforced by the compiler. These primitives help ensure thread safety and avoid common concurrency issues.
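To make the atomicity point concrete, here is a minimal sketch using a hypothetical `TicketBooth` actor. Even inside an actor, a check followed by an await and then a mutation is not atomic, because other calls can run on the actor while the first one is suspended (actor reentrancy). The `slowApproval()` helper is a made-up stand-in for any async call.

```swift
actor TicketBooth {
    private(set) var remaining = 1

    // Hypothetical slow step standing in for network, disk, or another actor.
    private func slowApproval() async {
        try? await Task.sleep(nanoseconds: 10_000_000)
    }

    // Check-then-act split by an await: NOT atomic.
    // Two concurrent callers can both pass the guard before either decrements.
    func sellUnsafely() async -> Bool {
        guard remaining > 0 else { return false }
        await slowApproval()   // suspension point: the actor may run other calls here
        remaining -= 1         // may drive `remaining` below zero
        return true
    }

    // Re-validate state after the await before mutating it.
    func sellSafely() async -> Bool {
        guard remaining > 0 else { return false }
        await slowApproval()
        guard remaining > 0 else { return false } // state may have changed while suspended
        remaining -= 1
        return true
    }
}

// Two concurrent purchases of the last ticket; returns both results plus the final count.
func sellLastTicketTwice() async -> (Bool, Bool, Int) {
    let booth = TicketBooth()
    async let first = booth.sellSafely()
    async let second = booth.sellSafely()
    let results = await [first, second]
    let left = await booth.remaining
    return (results[0], results[1], left)
}
```

With `sellSafely()`, exactly one of the two purchases succeeds; swapping in `sellUnsafely()` can let both succeed.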

While primitives like os_unfair_lock and NSLock can be used safely in synchronous code for short, well-defined critical sections, they require caution. Holding them across an await or for long-running operations can lead to deadlocks and violates the runtime contract of forward progress.
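One way to follow this rule is to take the lock only inside small synchronous methods, so it can never be held across an await. The sketch below uses a hypothetical `ThreadSafeCache` type; `@unchecked Sendable` is our own assertion that all mutable state is guarded by the lock.

```swift
import Foundation

final class ThreadSafeCache: @unchecked Sendable {
    private let lock = NSLock()
    private var storage: [String: Int] = [:] // only accessed while holding `lock`

    // Short, synchronous critical sections: no awaits inside.
    func value(forKey key: String) -> Int? {
        lock.lock()
        defer { lock.unlock() }
        return storage[key]
    }

    func setValue(_ value: Int, forKey key: String) {
        lock.lock()
        defer { lock.unlock() }
        storage[key] = value
    }
}

// Avoid: lock.lock(); await something(); lock.unlock()
// The suspended task would keep the lock while its thread runs other work,
// and the unlock could run on a different thread than the lock.
func refresh(_ cache: ThreadSafeCache) async -> Int? {
    cache.setValue(1, forKey: "answer")          // lock taken and released here
    try? await Task.sleep(nanoseconds: 1_000_000) // no lock held across this await
    return cache.value(forKey: "answer")
}

let cached = await refresh(ThreadSafeCache())
```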

Unsafe primitives such as DispatchSemaphore, pthread_cond, NSCondition, and pthread_rw_lock are strongly discouraged. They hide dependency information from the Swift runtime, which can cause unpredictable behavior and make debugging difficult. To identify uses of unsafe primitives during development, run your app with the environment variable LIBDISPATCH_COOPERATIVE_POOL_STRICT=1. This modified debug runtime enforces the invariant of forward progress and can flag potential issues.

Whenever possible, though, we should use actors. They offer a safer and more efficient alternative to locks and serial queues for mutual exclusion: only one task executes on an actor at a time, which protects the actor's state from data races. Actors also support efficient hopping between actors and prioritization, which keeps the app responsive and avoids priority inversions.
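To make the DispatchSemaphore warning concrete, here is a sketch of a common anti-pattern (kept commented out so it never runs) next to the async alternative. `fetchValue()` and `fetchDoubledValue()` are hypothetical functions standing in for real async work.

```swift
// Hypothetical async API standing in for real async work.
func fetchValue() async -> Int {
    try? await Task.sleep(nanoseconds: 10_000_000)
    return 42
}

// Avoid: blocking a thread until async work signals a semaphore.
// The runtime cannot see that this thread is waiting on the Task, and if the
// Task needs a cooperative thread that is blocked like this one, nothing progresses.
//
// func fetchValueBlocking() -> Int {
//     let semaphore = DispatchSemaphore(value: 0)
//     var result = 0
//     Task {
//         result = await fetchValue()
//         semaphore.signal()
//     }
//     semaphore.wait()
//     return result
// }

// Prefer: stay async, so the caller suspends instead of blocking its thread.
func fetchDoubledValue() async -> Int {
    let value = await fetchValue()
    return value * 2
}

let doubled = await fetchDoubledValue()
```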

So why not use actors everywhere? In practice, there are situations where they might not be ideal, such as synchronizing reads and writes of individual properties within a class. In this case, actors aren't quite the right tool because:

- Granularity of Access: Actors are designed to protect their entire state. Every method call on an actor is executed serially, so only one task can access the actor's properties at a time. This is great for preventing data races across multiple properties. However, if you only need to synchronize access to a single property, an actor might introduce unnecessary overhead, since every access from outside the actor, including simple reads, must go through it with an await.

- Order of Operations: You can't guarantee the order in which calls from different tasks will run on an actor. If a sequence of operations on a single property must be performed in a specific order, an actor might not be the best solution.

Let's consider an example: Imagine you have a class representing a counter:

```swift
// Not thread-safe: concurrent calls to increment/decrement race on `count`.
class Counter {
    private var count = 0

    func increment() {
        count += 1
    }

    func decrement() {
        count -= 1
    }

    func getValue() -> Int {
        return count
    }
}
```
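For comparison, a sketch of the same counter as an actor might look like this (`CounterActor` and `exercise(_:times:)` are hypothetical names). Increments are now serialized safely, but every call from outside the actor, including a plain read through `getValue()`, becomes an `await`.

```swift
actor CounterActor {
    private var count = 0

    func increment() { count += 1 }
    func decrement() { count -= 1 }
    func getValue() -> Int { count }
}

// Increments from many child tasks, then reads the result.
// Each call hops onto the actor and may suspend while it is busy.
func exercise(_ counter: CounterActor, times: Int) async -> Int {
    await withTaskGroup(of: Void.self) { group in
        for _ in 0..<times {
            group.addTask { await counter.increment() }
        }
        // withTaskGroup waits for all child tasks before returning.
    }
    return await counter.getValue()
}
```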

If you want to ensure that increment and decrement operations are synchronized, making Counter an actor is one option. However, every read through getValue would then also be serialized by the actor and require an await, which can hurt performance when reads are frequent. In such cases, alternative synchronization mechanisms like atomics, dispatch queues, or locks can be more appropriate, because they let you synchronize access to specific properties without routing every access through an actor. For instance, you could use a ManagedAtomic from the swift-atomics package to synchronize access to the count property:

```swift
import Atomics // module provided by the swift-atomics package

class Counter {
    // ManagedAtomic is a reference type, so the property itself can be a `let`.
    private let count = ManagedAtomic<Int>(0)

    func increment() {
        count.wrappingIncrement(ordering: .sequentiallyConsistent)
    }

    func decrement() {
        count.wrappingDecrement(ordering: .sequentiallyConsistent)
    }

    func getValue() -> Int {
        return count.load(ordering: .sequentiallyConsistent)
    }
}
```

Here, ManagedAtomic ensures that increment, decrement, and read operations on the count property are atomic and synchronized, without requiring the entire Counter class to be an actor. Therefore, while actors are powerful tools for concurrent programming, they might not always be the most efficient or flexible solution. When dealing with individual properties that require synchronized access, consider using alternative synchronization mechanisms like atomics, dispatch queues, or locks. Remember to carefully evaluate the trade-offs and choose the approach that best suits your specific needs.

3. Deadlock Scenario: GCD Queues and Swift Concurrency

Problem Description

Integrating Swift Concurrency with GCD can lead to deadlock scenarios, especially when tasks are blocked inside GCD queues. Understanding how GCD queues work and how they differ from Swift Concurrency is crucial to avoid these issues.

GCD Queue

- Serial Queue: Executes tasks one at a time in the order they are added. This ensures that tasks do not run concurrently and are processed sequentially.

- Concurrent Queue: Allows multiple tasks to execute simultaneously. Tasks are started in the order they are added, but they can run in parallel and complete in any order. GCD concurrent queues can overcommit by creating more threads than there are available hardware threads to ensure work progresses.
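A small sketch of the difference, using hypothetical queue labels: work on the serial queue runs in submission order, while items on the concurrent queue may overlap, so shared state they touch needs its own protection (an NSLock here).

```swift
import Foundation

// Serial queue: one work item at a time, in FIFO order.
let serialQueue = DispatchQueue(label: "example.serial")
var serialLog: [Int] = []
for i in 0..<5 {
    serialQueue.async { serialLog.append(i) } // only touched from serialQueue
}
serialQueue.sync {} // returns after the earlier items have run

// Concurrent queue: items can overlap and finish in any order.
let concurrentQueue = DispatchQueue(label: "example.concurrent", attributes: .concurrent)
let group = DispatchGroup()
let logLock = NSLock()
var concurrentLog: [Int] = []
for i in 0..<5 {
    concurrentQueue.async(group: group) {
        logLock.lock()
        concurrentLog.append(i)
        logLock.unlock()
    }
}
group.wait() // block this (non-async) thread until every item finishes

print(serialLog)              // always in submission order
print(concurrentLog.sorted()) // raw order can vary between runs
```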

Swift Concurrency

- Task & Continuation: A Task represents a unit of asynchronous work that can be awaited. Continuations (for example, withCheckedContinuation) let callback-based code that doesn't use async/await integrate into Swift's concurrency model: the task suspends until the callback resumes the continuation. In this context, they can be used to bridge GCD-based code.

- Non-Overcommitting: Swift Concurrency does not expect blocking and will not overcommit CPU cores; it does not create extra threads to replace blocked ones. This lets it manage resources efficiently, but it can deadlock if tasks block.
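As a sketch of the continuation bridge described above, here is a hypothetical callback-based function `legacySquare(_:completion:)` that does its work on a GCD queue, wrapped with `withCheckedContinuation`. The awaiting task is suspended rather than blocking its thread.

```swift
import Foundation

// Hypothetical callback-based API that does its work on a GCD queue.
func legacySquare(_ input: Int, completion: @escaping @Sendable (Int) -> Void) {
    DispatchQueue.global().async {
        completion(input * input)
    }
}

// Bridge it into async/await. The calling task suspends (its thread is free
// for other work) until the callback resumes the continuation.
func square(_ input: Int) async -> Int {
    return await withCheckedContinuation { continuation in
        legacySquare(input) { result in
            continuation.resume(returning: result) // must be resumed exactly once
        }
    }
}

let squared = await square(7)
```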

Problem When Swift Concurrency Task Is Blocked Inside GCD Queue

When a Swift Concurrency task is blocked inside a GCD queue, it can lead to a deadlock if the queue is waiting for a thread from the thread pool to dispatch a task, but no threads are available because they are all blocked.

Code Example: Read & Write Patterns Using GCD Concurrent Queue

```swift
import XCTest

final class BarrierTests: XCTestCase {
    func test() async {
        let subsystem = Subsystem()
        await withTaskGroup(of: Void.self) { group in
            for index in 0 ..< 100 {
                group.addTask { subsystem.performWork(id: index) }
            }
            await group.waitForAll()
        }
    }
}

final class Subsystem {
    let queue = DispatchQueue(label: "my concurrent queue", attributes: .concurrent)

    func performWork(id: Int) {
        if id % 10 == 0 { write(id: id) }
        else { read(id: id) }
    }

    func write(id: Int) {
        print("schedule exclusive write \(id)")
        queue.async(flags: .barrier) { print(" execute exclusive write \(id)") } // Asynchronous write with barrier
        print("schedule exclusive write \(id) done")
    }

    func read(id: Int) {
        print("schedule read \(id)")
        queue.sync { print(" execute read \(id)") } // Synchronous read, blocking the caller
        print("schedule read \(id) done")
    }
}
```

Ref: https://forums.swift.org/t/deadlock-when-using-dispatchqueue-from-swift-task/66058/25

Explanation

- Multiple Synchronous Reads: The concurrent queue allows several reads to run at the same time; each queue.sync call blocks its caller until the read finishes.

- Barrier for Writes: The write operation is dispatched asynchronously with a barrier, so it only runs when no other reads or writes are running on the queue.

While the code might not immediately cause a deadlock, it has the potential to do so when:

- An event triggers multiple read and write operations.

- A large number of read operations start from a Swift Concurrency context, consuming the available threads.

- A write operation is triggered, making an asynchronous call to the concurrent queue with the .barrier flag.

- The queue waits for a thread from the thread pool to dispatch the write operation, but no threads are available because they are all blocked in reads. Since Swift Concurrency won't overcommit (create more threads), the barrier never runs, the reads queued behind it never finish, and the program deadlocks.

Observations

- GCD and Swift Concurrency: They seem to share the same thread pools, which can exacerbate the issue.

- Deadlock Consequences: When this deadlock occurs, subsequent tasks will not be dispatched, leading to program malfunction. The main thread (MainActor) may still work and receive events, but other threads will not make progress.

Here are some rules of thumb and considerations:

- Avoid Blocking: Do not block inside Swift Concurrency, for example by calling .sync on a GCD queue, especially when waiting on work that is enqueued but not yet running (e.g., an async dispatch with a barrier). This violates the runtime contract of forward progress mentioned earlier.

- Limit Task Creation: Avoid creating too many Swift Concurrency tasks with Task blocks or TaskGroup, as unexpected blocking can easily lead to deadlocks, especially when interacting with legacy frameworks.

- Minimize Mixing: Try to avoid mixing Swift Concurrency and GCD, as it can lead to unexpected behavior that is difficult to debug.

Given the discussion so far, the solution is simply to avoid using a concurrent queue for the read-write pattern. Instead, we could use NSLock (with caution) or pthread_rw_lock (not recommended).
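A minimal sketch of the NSLock approach, reshaping the `Subsystem` from the forum example above into a hypothetical `LockedSubsystem`. Reads and writes now take a lock for a short synchronous critical section, so no caller waits on enqueued barrier work. One behavioral difference: writes complete before `write(id:)` returns instead of being dispatched asynchronously. The `value` property is a made-up piece of shared state.

```swift
import Foundation

final class LockedSubsystem: @unchecked Sendable {
    private let lock = NSLock()
    private var value = 0 // guarded by `lock`

    func performWork(id: Int) {
        if id % 10 == 0 { write(id: id) }
        else { read(id: id) }
    }

    func write(id: Int) {
        lock.lock()
        defer { lock.unlock() }
        value = id // short critical section; never waits on other queued work
    }

    @discardableResult
    func read(id: Int) -> Int {
        lock.lock()
        defer { lock.unlock() }
        return value
    }
}

// Same workload shape as the original test: 100 child tasks, every 10th one writes.
func runWorkload() async -> Int {
    let subsystem = LockedSubsystem()
    await withTaskGroup(of: Void.self) { group in
        for index in 0 ..< 100 {
            group.addTask { subsystem.performWork(id: index) }
        }
    }
    return subsystem.read(id: -1)
}
```

The final value is whichever write happened to run last, so it is always one of 0, 10, ..., 90.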

4. Summary

Swift Concurrency provides powerful tools for writing safer and more efficient concurrent code. Developers should prioritize the use of safe primitives like await, Actors, and Task groups as they offer compiler-enforced safety and help manage concurrency effectively. While traditional synchronization mechanisms like locks (NSLock, os_unfair_lock) and dispatch queues are still available, it's important to exercise caution when using them in Swift Concurrency. They can introduce deadlock risks if not handled properly, especially when interacting with asynchronous operations.